30 2026 AI Predictions

Predictions on the path of AI in the next year

Random Observation/Comment #919: If I’m living in a time when I’m addicted to my smartphone, will there be a time when I’m addicted to my smart assistant? Are we basically there?

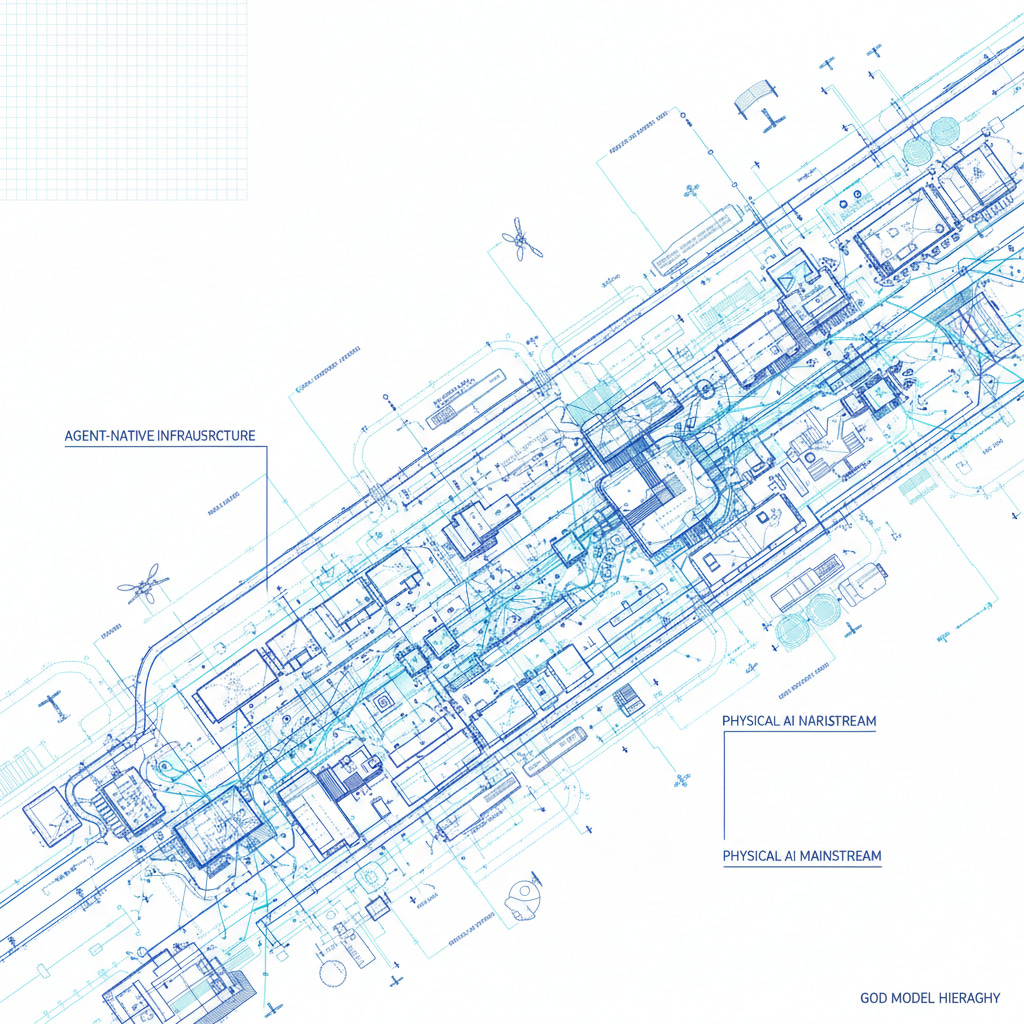

//I don’t know what the AI generated, but it looks cool

Why this List?

I spent a normal amount of Clemens time (way too much regular person time) reading news and papers about AI trends. The podcast discussions are interesting and often recycle things I’ve read elsewhere, but it’s still good to hear someone I respect say it. From a fairly long list across Scott Galloway, All-In, a16z, and many others, I’ve compiled the predictions that make the most sense to me. I’m not making these predictions just so I can be right later; I’m making them because writing them down is the best way for me to digest information.

In general, I think the same addictive pattern from social media (where you constantly return to check comments and notifications) will be a killer feature of small language models (SLMs) on your phone. Since the public internet has already been scraped, the new data will be your personal, live data, reviewed by your Clembot digital twin as part of the Android OS. Imagine getting pinged: “How was your meeting? I have notes from Zoom that I can share with your coworkers to start some conversations.”

Pivot to Production - I remember saying this about blockchain in 2019, but that won’t stop me from saying it about generative AI in 2026. I think this is the year organizations quietly shift their more mature AI technologies from experiments to broad enterprise deployment anchored to measurable business outcomes.

Agent-Native Infrastructure - Legacy backends designed for 1:1 human-to-system interaction will break under “agent-speed” workloads. Yes, CAPTCHA and other anti-bot methods have been around since the beginning of internet accounts, but it’s so much easier to build believable automated interactions now. The new control plane must handle recursive, millisecond-level thundering herds of 5,000+ subtasks. We’ll likely see cloud bills go up.
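A rough sketch of the simplest defense against an agent-driven thundering herd: cap how many subtasks can hit the backend at once. This assumes Python’s `asyncio`; `call_backend` is a hypothetical stand-in for a legacy API call, and the limits are made-up numbers. A real control plane would also need rate limits, retries with jitter, and queueing.

```python
import asyncio
import random


async def call_backend(task_id: int) -> int:
    """Hypothetical stand-in for a legacy backend call (not a real API)."""
    await asyncio.sleep(random.uniform(0, 0.001))
    return task_id * 2


async def run_subtasks(n_tasks: int, max_in_flight: int) -> tuple[list[int], int]:
    """Fan out n_tasks subtasks, but never let more than max_in_flight run at once."""
    sem = asyncio.Semaphore(max_in_flight)
    in_flight = 0
    peak = 0  # highest concurrency actually observed

    async def guarded(task_id: int) -> int:
        nonlocal in_flight, peak
        async with sem:  # waits here once the cap is reached
            in_flight += 1
            peak = max(peak, in_flight)
            try:
                return await call_backend(task_id)
            finally:
                in_flight -= 1

    # gather() returns results in the same order the tasks were submitted
    results = await asyncio.gather(*(guarded(i) for i in range(n_tasks)))
    return list(results), peak


if __name__ == "__main__":
    results, peak = asyncio.run(run_subtasks(5000, max_in_flight=50))
    print(len(results), peak)
```

The agent still fires 5,000 subtasks, but the backend only ever sees up to 50 at a time; the trade-off is latency, which is where those cloud bills start to grow.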

Physical AI going Mainstream - Intelligence stops being only screen-based and moves into robots, drones, and autonomous vehicles. After smart warehousing, the next step is applying it to optimize mainstream city streets and infrastructure.

The “God Model” Hierarchy - The market stabilizes into a “pyramid” structure: a few massive, centralized “god models” (like supercomputers) at the top, supported by millions of specialized, low-cost “small models” at the edge. In practice, the small language models (SLM nano models) on the Android OS will likely handle what they can locally and pull information from a smarter model only when needed, saving a lot on storage, bandwidth, and compute.
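Here’s a toy sketch of that edge-first routing: try the on-device model, and escalate to the big cloud model only when the small one isn’t confident. Everything here is hypothetical: `tiny_slm` and `cloud_model` are stand-ins, and the self-reported confidence score and 0.8 threshold are assumptions, not how any real Android or Gemini API works.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Answer:
    text: str
    confidence: float  # 0.0 to 1.0, self-reported by the model (assumption)
    source: str        # "edge" or "cloud"


def route(prompt: str,
          small_model: Callable[[str], Answer],
          big_model: Callable[[str], Answer],
          threshold: float = 0.8) -> Answer:
    """Try the on-device model first; escalate to the cloud model only when unsure."""
    local = small_model(prompt)
    if local.confidence >= threshold:
        return local  # no network call: saves bandwidth and cloud compute
    return big_model(prompt)


# Hypothetical stand-ins for an on-device SLM and a cloud "god model".
def tiny_slm(prompt: str) -> Answer:
    known = {"set a timer for 5 minutes": "Timer set."}
    if prompt in known:
        return Answer(known[prompt], 0.95, "edge")
    return Answer("I'm not sure.", 0.2, "edge")


def cloud_model(prompt: str) -> Answer:
    return Answer(f"Detailed answer to: {prompt}", 0.99, "cloud")
```

With these stand-ins, `route("set a timer for 5 minutes", tiny_slm, cloud_model)` stays on the device, while a harder question gets sent up the pyramid.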

Multimodal Creation as Standard - Users will collaboratively generate or edit entire 3D scenes and complex works by feeding all forms of reference content, like images, video, and sound, into a single model. This could get pretty scary for the intuitive ways humans authenticate each other (recognizing a face or a voice) if we’re able to copy people across many of these vectors.

The “Year of Me” - Consumer products will move from mass-produced to hyper-personalized. This means more AI tutors and health assistants that adapt in real time to an individual’s pace, routines, and biology.

China-US Bipolarity - AI development becomes a two-horse race between China (DeepSeek, Qwen) and the US. With the strict regulation in the EU AI Act, Europe risks falling behind on adoption.

Silicon Workforce Management - AI agents aren’t just software; they’re a “silicon-based workforce,” a trend on the scale of “globalization.” This will require dedicated onboarding, performance tracking, and financial operations (FinOps) management frameworks. If I make a Clembot so good that you can rent it to take over some of your tasks, is Clembot the IP of a company that then acts as a consultant to the enterprise? Do you pay Clembot based on output and OKR results instead of hours worked? Can I offer rentable “AI-as-a-service” instead of a recurring monthly software subscription? Is my AI bundled, rebundled, or just a straightforward tool used by an orchestrator/manager of AIs?

Vertical AI “Multiplayer Mode” - Professional software (legal, healthcare) evolves from simple reasoning to coordinating among multiple parties, such as buyers, sellers, and advisors, each with distinct permissions and context. If multiple companies can work off the same shared project with proper permissions, then Slack as a communication medium might change altogether.

Inference Economics Reckoning - As usage explodes, enterprises will hit a cloud-cost tipping point where many repatriate steady production workloads to purpose-built, on-premises AI data centers. There may come a point where AI optimization depends on the cost of results and infrastructure allocation instead of hiring specific people. The “human resource” side of this may fall under AI compute resourcing and infrastructure allocation.
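The tipping point is basically break-even arithmetic: up-front hardware cost divided by the monthly savings versus renting. A minimal sketch, assuming flat monthly costs and ignoring depreciation, financing, and utilization swings; the dollar figures in the example are hypothetical, not real quotes.

```python
import math


def break_even_months(capex: float, onprem_monthly: float, cloud_monthly: float) -> float:
    """Months until buying hardware beats renting it in the cloud.

    capex:          one-time on-prem build-out cost
    onprem_monthly: ongoing on-prem cost (power, cooling, staff)
    cloud_monthly:  what the same steady workload costs per month in the cloud
    Returns math.inf if on-prem never pays off.
    """
    monthly_savings = cloud_monthly - onprem_monthly
    if monthly_savings <= 0:
        return math.inf
    return capex / monthly_savings


# Hypothetical numbers: $2.4M build-out, $50k/month to run, $250k/month in the cloud.
print(break_even_months(2_400_000, 50_000, 250_000))  # 12.0
```

This is why the shift hits “consistent” workloads first: spiky usage lowers the effective cloud bill and pushes the break-even point out.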

Chip Surplus & Price Collapse - Following the historical law of “shortage leads to oversupply,” massive GPU build-outs will eventually result in a supply glut and a collapse in the unit cost of running AI models. The next thing whose cost goes to zero might be intelligence. I wrote a piece about costs going to zero when I was first writing about web3 digital asset issuance.

Systems of Coordination - “Systems of record” (CRMs, ERPs) will lose ground to a new orchestration layer that manages multi-agent interactions and creates shared memory and context across siloed data. I do smell the data lake play from 2009 coming back.

Autonomous Labs - The most obvious productivity boost is in research. Super driven PhD students will probably spend less time writing papers and more time running real-world experiments. Autonomous laboratories can accelerate scientific discovery in life sciences and chemistry, running protein folding and other experiments with limited human intervention. Models and simulations should definitely get a lot faster.

Personal AI Memory - New frontier models (like GPT-6) will move from synchronous responses to “long-horizon” tasks that use deep personal memory to understand a user’s long-term habits and quirks. This could simply be a larger context window, or the ability to save thread-based memory into longer-term memory (like how sleep converts short-term memory into long-term memory). Maybe my AI needs to sleep.
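If my AI does need to sleep, a toy version might look like this: facts pile up in a short-term buffer during conversations, and a “sleep” pass promotes the ones that came up repeatedly into long-term memory, then clears the buffer. This is a hypothetical sketch, not how any frontier model actually stores memory; the `ClembotMemory` class and the “mentioned at least twice” rule are both made up for illustration.

```python
from collections import Counter


class ClembotMemory:
    """Hypothetical two-tier memory: a short-term buffer consolidated on 'sleep'."""

    def __init__(self, min_mentions: int = 2):
        self.short_term: list[str] = []   # raw facts from today's threads
        self.long_term: set[str] = set()  # facts that survived consolidation
        self.min_mentions = min_mentions  # assumed promotion rule

    def observe(self, fact: str) -> None:
        # Normalize so "Likes tea" and "likes tea " count as the same fact.
        self.short_term.append(fact.strip().lower())

    def sleep(self) -> set[str]:
        """Promote facts mentioned often enough, then clear the short-term buffer."""
        counts = Counter(self.short_term)
        promoted = {fact for fact, n in counts.items() if n >= self.min_mentions}
        self.long_term |= promoted
        self.short_term.clear()
        return promoted
```

A real system would need something smarter than exact-match counting (summaries, embeddings, decay), but the shape is the same: cheap short-term capture, then a periodic consolidation step.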

Cyber Defense at Machine Speed - AI eases the cybersecurity hiring crisis by automating repetitive Tier 1 work, which lets human security teams focus on active threat hunting. There’s probably already some amazing automated QA testing on every commit at the source-control level for Google’s single main branch.

GEO over SEO - I’m not sure whether the terms Generative Engine Optimization (GEO) or AI Engine Optimization (AIEO) will be fully adopted, but there’s going to be a different way of discovering news as people use Gemini AI Overviews and shift their habits toward LLMs for finding answers or learning about subjects. Vibe coding has already been a huge change in the coding realm, because developers no longer need to just read API docs; they can ask Claude Code to write the integration examples and pull from available resources. GEO/AIEO may take a larger share from SEO than expected, which shifts marketing success metrics toward semantic richness and being cited in AI-generated answers rather than keywords or backlinks.

Step Inside Video - Video generation with Sora and Veo3 is already absolutely ridiculous. I think the next step is for video to evolve beyond a passive medium. World models will let users explore generated environments like games, with characters and objects maintaining “quiet consistency.”

The “Know Your Agent” (KYA) Primitive - As non-human identities outnumber humans, financial services will require cryptographically signed “KYA” credentials that link agents to their human principals and liability. Hopefully this doesn’t go the way of many MCP setups, where credentials sit in a .env file and can easily leak.
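To make the KYA idea concrete, here’s a sketch of a signed credential that binds an agent to its human principal with an expiry. Big assumptions: there is no standard KYA format that I’m aware of, so the claim names (`agent_id`, `principal`, `expires_at`) are made up, and I’m using a shared-secret HMAC from Python’s standard library to keep it self-contained. A real scheme would more likely use public-key signatures so verifiers never hold the signing secret, and that secret should live in a secrets manager, not a .env file.

```python
import hashlib
import hmac
import json
import time
from typing import Optional


def _canonical(claims: dict) -> bytes:
    # Stable JSON encoding so the signer and verifier hash identical bytes.
    return json.dumps(claims, sort_keys=True, separators=(",", ":")).encode()


def issue_credential(secret: bytes, agent_id: str, principal: str, ttl_s: int = 3600) -> dict:
    """Sign hypothetical KYA claims linking an agent to its human principal."""
    claims = {"agent_id": agent_id, "principal": principal,
              "expires_at": int(time.time()) + ttl_s}
    sig = hmac.new(secret, _canonical(claims), hashlib.sha256).hexdigest()
    return {"claims": claims, "sig": sig}


def verify_credential(secret: bytes, cred: dict, now: Optional[float] = None) -> bool:
    """Reject tampered or expired credentials."""
    expected = hmac.new(secret, _canonical(cred["claims"]), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, cred["sig"]):  # constant-time comparison
        return False
    now = time.time() if now is None else now
    return now < cred["claims"]["expires_at"]
```

If someone swaps the `principal` to dodge liability, the signature no longer matches; if the credential leaks, the expiry at least limits how long it’s useful.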

Electro-Industrial Stack - Software that takes the wheel of electric vehicles, drones, and data centers will expand into powering electronics more broadly. This means the data behind these algorithms will become even more important.

Productivity Explosion - AI investment supports a “productivity surge,” potentially driving U.S. real GDP growth toward 3%. I do think the benefits will be unevenly distributed across sectors. I also think the smarter people will not claim 2x or 3x productivity, but rather build at the right pace with multiple layers of fact checking and review.

Value-Based Pricing - Startups shift away from “pay-per-token” toward value-based pricing. Similar to the AI-as-a-Service side of things, we’ll probably be sharing in the productivity gains these AI bots or startups provide to specialized professionals like doctors or lawyers.

Privacy as the Crypto Moat - In DeFi, privacy becomes the ultimate network effect. Enterprises still want to keep their portfolio management strategies secret so they don’t leak information to adversarial parties watching public chains.

Search for More Data - With all of the internet’s data exhausted in training (yes, we’ve probably trained on it all already), the next frontier will move to new sources of real-time data. Startups will look everywhere to extract, structure, and license the information trapped in unstructured, uncompressed raw data. LLMs are definitely very good at compressing and summarizing information.

Multi-Agent Orchestration - Business value will be delivered through coordinated digital teams where specialized agents collaborate autonomously. I still think they will be best controlled within a simulation with shared knowledge, but there could come a time when each consultancy sends its own AI consultant to collect requirements and communicate with each team’s AI knowledge hub.

AI-Native Universities - Traditional education transforms into “adaptive academic tutors” where learning paths can evolve in real-time and students are assessed based on how they can interact with these tutors.

Banking Infrastructure Upgrade - Tokenized assets and stablecoins will finally trigger a core bank ledger upgrade (calling it again, even though we said the projects were big enough back in 2022). What will likely happen is that any new technology becomes just one more standard for the regulatory teams to support. Can you believe some banks still send their information over fax infrastructure?

Sustainable Infrastructure Innovation - To manage grid stress, data centers will move toward more sustainable energy options. This could include modular nuclear energy, “underwater sinks,” or even orbital solar-powered compute.

Hybrid Workforces - The standard operating model (maybe even within SOWs) will be “blended” teams of human specialists and agentic modules, with humans focused solely on complex “edge” cases. Maybe these hybrid teams cost less than premium human labor?

Reinforcement Learning Shift - The next frontier of models (GPT-6/Gemini 4) moves beyond simple pre-training to heavy reinforcement learning from user accounts. I think these models will be much more engaging and more interested in asking questions to sustain a conversation than in just being an answering bot. This could be pretty dangerous: if you optimize for platform usage with addictive dark patterns (like social media amplifying bad news for negative reactions), I can imagine a digital assistant driving more engagement with the LLM through notifications and other straightforward engagement patterns. If my Clembot starts saying “How was that call? It sounded like a lot of information, do you want to talk about it?…. Great, let me write up some notes for you…” Man, that’s wild.

Finally a year for voice - I think the reinvention of the on-screen app interface will happen intuitively through voice, backed by secondary personalized admin pages with more transparent algorithm training. If we can define our algorithm and understand who we are by what we watch, then maybe we can train ourselves to be better. We can move into the READ, WRITE, OWN, TRAIN phase.

~See Lemons look forward to 2026 Clembot

My Clembot AI Journey

Random Observation/Comment #878: I’ve carefully considered what I’ve outsourced to Clembot, and writing lists is definitely not fully one of them. I don’t want to stop exercising the part of my brain that does hard things.